User Acceptance Testing Part 2

Yesterday I described the cost and effort of creating, executing and maintaining test scripts for User Acceptance Testing. I also made the bold statement that the TrialGrid approach could reduce this effort by a factor of 10. As you might expect, we achieve this through automation. Of the three parts:

1) Create the tests

2) Execute the tests

3) Maintain the tests

The first, Create the tests, is the most technically challenging. Before we can automate creation of tests we first need to understand what tests are going to look like and how they are executed.

If we look at the test script from the first part of this series we can see that it is designed to be read and executed by a human:

Name : Check SCREENING_VISIT_DATE

Version : 1

| Step | Instruction | Expected Result | Actual Result | Comments | User | Date | Pass / Fail |
|---|---|---|---|---|---|---|---|
| 1 | Log into EDC using an Investigator role | Login succeeds, user is at Rave User home page | | | | | |
| 2 | Navigate to Site 123 | User is at Subject listing for Site 123 | | | | | |
| 3 | Create a new Subject with the following data: Date of Birth = 10 JAN 1978 | Subject is created with Date of Birth 10 JAN 1978 | | | | | |
| 4 | Navigate to Folder Screening, Visit Form and enter the following data: Visit Date = 13 DEC 1968 | Visit Date for Screening Folder is 13 DEC 1968. | | | | | |
| 5 | Confirm that Edit Check has fired on Visit Date with text "Visit Date is before subject Date of Birth. Please correct." | Edit Check has fired. | | | | | |

Once executed, the signed and dated test script is kept as evidence for review by the Sponsor.

Clearly, if we want to automate the execution of these scripts, we need to keep that readability. We need a format for test scripts that is structured enough for software to execute but still naturally readable for humans.

Executable Specifications

Fortunately, software development has had a solution to this problem for nearly a decade. Behaviour Driven Development (BDD) is an approach to writing specifications and acceptance tests that can be read and understood by humans and executed by software. This is exactly what we are looking for. BDD doesn't prescribe any particular format for these tests, but the most widely adopted standard is called "gherkin"[1].

Gherkin uses a simple syntax. A feature file starts with a "Feature" declaration, which documents the Scenario tests that follow. Next comes a free-text description of the tests, and then the Scenarios themselves, for example one called "Buying things".
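To make that structure concrete, here is a minimal Python sketch that splits a small feature file into its Feature name, description and Scenario names. The feature text and the `parse_feature` helper are illustrative assumptions, not TrialGrid code, and the parser deliberately recognises only `Feature:` and `Scenario:` lines; real Gherkin parsers also handle Background, Scenario Outline, tags and more.

```python
from dataclasses import dataclass, field

# Illustrative feature file (hypothetical content)
FEATURE = """\
Feature: Online shop
  As a customer
  I want to buy things online
  So that I don't have to visit the shop

  Scenario: Buying things
    Given I have an empty basket
    When I add 1 book to my basket
    Then my basket should contain 1 item
"""


@dataclass
class Feature:
    name: str
    description: list = field(default_factory=list)
    scenarios: list = field(default_factory=list)


def parse_feature(text):
    """Minimal sketch: only understands 'Feature:' and 'Scenario:' lines."""
    feature = None
    in_scenario = False
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # ignore blank lines and comments
        if line.startswith("Feature:"):
            feature = Feature(name=line[len("Feature:"):].strip())
        elif line.startswith("Scenario:"):
            if feature is None:
                raise ValueError("Scenario found before Feature declaration")
            in_scenario = True
            feature.scenarios.append(line[len("Scenario:"):].strip())
        elif feature is not None and not in_scenario:
            # Free text between the Feature line and the first Scenario
            feature.description.append(line)
        # Step lines inside a Scenario are ignored by this sketch
    if feature is None:
        raise ValueError("no Feature declaration found")
    return feature


parsed = parse_feature(FEATURE)
```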

Scenarios follow a format of:

Given some background information that sets up the test conditions

When some action is taken

Then I should see some result
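Gherkin tools also accept "And" and "But" lines, which continue whichever of Given, When or Then came before them. The sketch below, a hypothetical `classify_steps` helper with made-up step wording based on the test script above, labels each step with its effective keyword and rejects anything that isn't a step.

```python
KEYWORDS = ("Given", "When", "Then")
CONJUNCTIONS = ("And", "But")


def classify_steps(lines):
    """Return (keyword, text) pairs; And/But inherit the previous keyword."""
    steps = []
    current = None
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        word, _, rest = line.partition(" ")
        if word in KEYWORDS:
            current = word
        elif word in CONJUNCTIONS:
            if current is None:
                raise ValueError(f"{word!r} has no preceding step: {line}")
        else:
            raise ValueError(f"not a Given/When/Then step: {line}")
        steps.append((current, rest))
    return steps


# Hypothetical step wording
scenario = [
    "Given a Subject with Date of Birth 10 JAN 1978",
    "When I enter Visit Date 13 DEC 1968 on the Screening Visit form",
    "Then a query should open on Visit Date",
    "And the query text should mention the Date of Birth",
]
classified = classify_steps(scenario)
```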

Acceptance tests for Edit Checks

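If we convert our original test script to the Gherkin Given..When..Then structure, each step of the script takes one of these roles: logging in, navigating to Site 123 and creating the Subject with its Date of Birth set up the test conditions (Given), entering the Screening Visit Date is the action (When), and the Edit Check query on Visit Date is the result we expect to see (Then).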

This format is a little wordy, mostly because of the need to specify Fields, Forms and Folders for data. Gherkin includes a data table structure that can help here, and we can combine it with some simple shortcuts for field selection.

That makes the test a bit more concise.
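A data table is just a block of pipe-delimited rows under a step, so turning it into field/value pairs is straightforward. In this sketch the "FOLDER.FORM.FIELD" path in the first column is a hypothetical stand-in for the field-selection shortcuts mentioned above (TrialGrid's actual shortcut syntax isn't shown here), and the OIDs are made up. Escaped pipes (`\|`) inside cells are not handled.

```python
# Hypothetical data table attached to a "When I enter the following data" step
TABLE = """\
| Field                       | Value       |
| SCREENING.DEMOG.DOB         | 10 JAN 1978 |
| SCREENING.VISIT.VISIT_DATE  | 13 DEC 1968 |
"""


def parse_table(lines):
    """Turn Gherkin table rows into dicts keyed by the header row."""
    rows = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("|"):
            continue  # not part of the table
        rows.append([cell.strip() for cell in line.strip("|").split("|")])
    if not rows:
        return []
    header, *body = rows
    return [dict(zip(header, row)) for row in body]


def split_field_path(path):
    """Hypothetical shortcut: 'FOLDER.FORM.FIELD' names one data point."""
    parts = path.split(".")
    if len(parts) != 3:
        raise ValueError(f"expected FOLDER.FORM.FIELD, got {path!r}")
    folder, form, field_oid = parts
    return {"folder": folder, "form": form, "field": field_oid}


data = parse_table(TABLE.splitlines())
```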

Automated Testing

The Given..When..Then style of test is readable to a human but can also be read by software. The format isn't totally free-form: each Given / When / Then step must conform to a pattern that the software understands. Currently TrialGrid understands around 50 patterns, which can check a wide range of states in Medidata Rave, not just whether a query exists. This means you can write tests that check whether Forms and Fields are visible (or not visible) to particular EDC user Roles, verify the calculations of Derivations, and confirm the results of data integrations such as IxRS feeds that enter data into Medidata Rave forms.
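One common way to implement "each step must conform to a pattern" is a registry of regular expressions, each mapped to a handler. The sketch below assumes that design; the step wordings and handler names are hypothetical, and the handlers only return what they would check rather than talking to Rave. A step that matches no pattern is rejected up front instead of being silently skipped.

```python
import re

STEP_PATTERNS = []  # (compiled regex, handler) pairs


def step(pattern):
    """Decorator registering a handler for step text matching `pattern`."""
    compiled = re.compile(pattern)

    def register(func):
        STEP_PATTERNS.append((compiled, func))
        return func

    return register


@step(r'field "(?P<field>[^"]+)" should be visible to role "(?P<role>[^"]+)"')
def check_visible(field, role):
    # A real handler would inspect Rave as that role; this one just reports
    return ("visible", field, role)


@step(r'a query should open on "(?P<field>[^"]+)" with text "(?P<text>[^"]+)"')
def check_query(field, text):
    return ("query", field, text)


def dispatch(step_text):
    """Call the first handler whose pattern matches the whole step text."""
    for pattern, handler in STEP_PATTERNS:
        match = pattern.fullmatch(step_text)
        if match:
            return handler(**match.groupdict())
    raise LookupError(f"no pattern understands step: {step_text!r}")
```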

The TrialGrid UAT module reads tests and executes them against a live Rave instance. Provide a Rave URL, study name, environment (e.g. TEST) and credentials, and TrialGrid will run your tests against that instance.
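The inputs listed above can be captured in a small configuration object that is validated before any test runs. This is a sketch with hypothetical field names; the `Study(Environment)` form of `study_oid` is an assumption about how Rave names a study environment, not something confirmed here. The password is excluded from the object's `repr` so it doesn't end up in logs.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class RaveTarget:
    """Where and as whom to run the tests (hypothetical structure)."""
    url: str
    study: str
    environment: str  # e.g. "TEST"
    username: str
    password: str = field(repr=False)  # keep credentials out of logs

    def __post_init__(self):
        if not self.url.startswith("https://"):
            raise ValueError("Rave URL should use https://")
        for name in ("study", "environment", "username", "password"):
            if not getattr(self, name):
                raise ValueError(f"{name} is required")

    @property
    def study_oid(self):
        # Assumption: the study environment is identified as "Study(Environment)"
        return f"{self.study}({self.environment})"


target = RaveTarget("https://rave.example.com", "MYSTUDY", "TEST", "uat_user", "s3cret")
```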

Data is entered via Rave Web Services and results are verified automatically. Screenshots of the Rave page showing the results of actions such as data entry and query creation can be captured for both Classic Rave and the new Rave EDC (formerly called RaveX). Results are updated in real time as the system works through each step, but you can also leave it to run unattended and view the results when it is done. TrialGrid runs these tasks in the background so you can get on with other work.
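Running unattended while still showing real-time progress comes down to two things: execute the steps on a worker thread, and report each result as soon as it is known. The sketch below shows that shape with Python's `concurrent.futures`; `fake_execute` is a hypothetical stand-in for entering data through Rave Web Services and checking the outcome. As a design choice, a step that raises an exception is recorded as a failure and the run carries on, so one broken step doesn't hide the results of the rest.

```python
from concurrent.futures import ThreadPoolExecutor


def run_steps(steps, execute, on_result=None):
    """Run steps in order, reporting each result as soon as it is known."""
    results = []
    for number, text in enumerate(steps, start=1):
        try:
            passed, detail = execute(text)
        except Exception as exc:  # record a crashed step as a failure
            passed, detail = False, f"error: {exc}"
        result = {"step": number, "text": text, "passed": passed, "detail": detail}
        results.append(result)
        if on_result is not None:
            on_result(result)  # e.g. push to a live progress view
    return results


def fake_execute(text):
    # Hypothetical stand-in for real data entry and verification in Rave
    if "crash" in text:
        raise RuntimeError("page did not load")
    return ("fail" not in text, "checked")


live_updates = []
with ThreadPoolExecutor(max_workers=1) as pool:
    # submit() returns at once; the caller is free to do other work here
    future = pool.submit(run_steps, ["step A", "step that should fail", "crash step"],
                         fake_execute, live_updates.append)
    results = future.result()  # wait only when the results are needed
```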

The output is a PDF document that shows the actions taken and the results, comparing expected results against actual and providing screenshots as evidence.
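The core of such a report is a per-step comparison of expected and actual results with a pointer to the evidence. The sketch below builds those rows and renders them as plain text; the row layout and screenshot file names are hypothetical, and producing the actual PDF would need a separate rendering library.

```python
def report_rows(checks):
    """checks: iterable of (step, expected, actual, screenshot path or None)."""
    rows = []
    for step, expected, actual, screenshot in checks:
        rows.append({
            "step": step,
            "expected": expected,
            "actual": actual,
            "result": "PASS" if actual == expected else "FAIL",
            "evidence": screenshot or "(none)",
        })
    return rows


def render_text(rows):
    """Plain-text stand-in for the PDF layout."""
    lines = ["Step | Expected | Actual | Result | Evidence"]
    for r in rows:
        lines.append(
            f"{r['step']} | {r['expected']} | {r['actual']} | {r['result']} | {r['evidence']}"
        )
    return "\n".join(lines)


rows = report_rows([
    (3, "Subject created", "Subject created", "step3.png"),
    (5, "Query opened", "No query", "step5.png"),
])
print(render_text(rows))
```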

Automated Maintenance

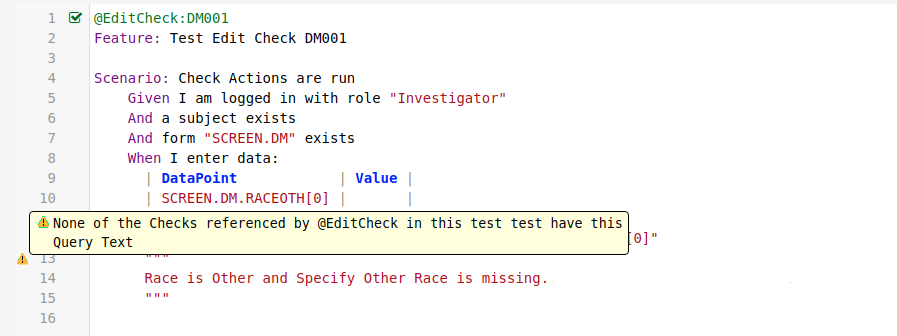

One of the challenges of test scripts is keeping them up-to-date with changes to the study. For example, imagine an Edit Check that ensures that, when Race is "Other", Race Other is specified on the demography form. The check has been programmed with the query text:

"Race is Other and Specify Other Race is missing. Please review and correct."

But in the test we are looking for:

"Race is Other and Specify Other Race is missing."

This could happen if the Specification or the programming of the Edit Check changed. But we want them to match. A human tester might be tempted to pass this test as "close enough" but automated test software looks for an exact match and will fail this test.
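The difference between a tester's "close enough" and an automated exact match is easy to show. In this sketch a substring check would wrongly accept the query, while an exact comparison rejects it and can report what the extra text is. The `compare_query_text` helper is hypothetical.

```python
EXPECTED = "Race is Other and Specify Other Race is missing."
ACTUAL = "Race is Other and Specify Other Race is missing. Please review and correct."


def compare_query_text(expected, actual):
    """Exact comparison, with a hint about where the texts differ."""
    if actual == expected:
        return "match"
    if actual.startswith(expected):
        return f"mismatch: query has extra text {actual[len(expected):]!r}"
    if expected.startswith(actual):
        return f"mismatch: query is missing {expected[len(actual):]!r}"
    return "mismatch"


close_enough = EXPECTED in ACTUAL  # True: a lenient check would pass the test
verdict = compare_query_text(EXPECTED, ACTUAL)
```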

The TrialGrid approach can identify these kinds of problems before the test is ever run. In this example, the Test editor shows a warning identifying the issue.

Here we give the system extra hints about what our test relates to through the @EditCheck "tag" (another feature of the gherkin format), which references the DM001 Edit Check. This has several benefits:

- By setting up a link between the Edit Check and the Test, we can say whether an Edit Check has been tested or not and calculate what percentage of Edit Checks and other objects are exercised by tests.
- The system has greater contextual knowledge about what is being tested and can help with warnings like the one shown here.
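Once tests carry tags naming the objects they exercise, coverage becomes a set calculation. This sketch assumes a hypothetical `@EditCheck:DM001` tag form (one tag per Edit Check, since Gherkin tags cannot contain spaces), and the Edit Check names are made up. It also reports tags that point at Edit Checks that don't exist, one possible source of warnings like the one described above.

```python
import re

TAG = re.compile(r"@EditCheck:(\S+)")  # hypothetical tag convention


def tagged_checks(feature_texts):
    """Collect Edit Check names from tag lines in the feature files."""
    found = set()
    for text in feature_texts:
        for line in text.splitlines():
            if line.strip().startswith("@"):
                found.update(TAG.findall(line))
    return found


def coverage(all_checks, feature_texts):
    tagged = tagged_checks(feature_texts)
    known = set(all_checks)
    tested = tagged & known
    percent = 100.0 * len(tested) / len(known) if known else 0.0
    untested = sorted(known - tested)
    unknown = sorted(tagged - known)  # tags naming checks that don't exist
    return percent, untested, unknown


features = [
    "@EditCheck:DM001\nScenario: Race Other must be specified",
    "@EditCheck:SV001 @EditCheck:XX999\nScenario: Visit Date after Date of Birth",
]
percent, untested, unknown = coverage(["DM001", "DM002", "SV001", "SV002"], features)
```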

TrialGrid performs similar validation of data dictionary values, unit dictionary selections, Folder, Field and Form references and more. This reduces the effort of maintaining tests and supports risk-based approaches in which you skip re-running a test because "nothing has changed and this test is still valid": the same validation can tell you that something has changed and the test may no longer be valid.
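The checks described above boil down to comparing what a test refers to with the current study definition. Here is a sketch under simplifying assumptions: the `Study` structure, field names and dictionary values are hypothetical, and a real study definition holds far more (unit dictionaries, Folder and Form references and so on).

```python
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical, simplified snapshot of the current study definition."""
    query_text: dict    # Edit Check name -> programmed query text
    dictionaries: dict  # field -> set of allowed data dictionary values
    fields: set         # fields that exist, as "FORM.FIELD"


def validate_test(study, check_name, expected_query, entries):
    """Return warnings for one test before it is run.

    entries: (field, value) pairs the test will enter.
    """
    warnings = []
    programmed = study.query_text.get(check_name)
    if programmed is None:
        warnings.append(f"{check_name}: Edit Check not found")
    elif programmed != expected_query:
        warnings.append(f"{check_name}: programmed query text is {programmed!r}")
    for field_name, value in entries:
        if field_name not in study.fields:
            warnings.append(f"{field_name}: field not found")
        elif field_name in study.dictionaries and value not in study.dictionaries[field_name]:
            warnings.append(f"{field_name}: {value!r} is not a data dictionary value")
    return warnings


study = Study(
    query_text={"DM001": "Race is Other and Specify Other Race is missing. Please review and correct."},
    dictionaries={"DM.RACE": {"White", "Black", "Asian", "Other"}},
    fields={"DM.RACE", "DM.RACEOTH"},
)
warnings = validate_test(
    study,
    "DM001",
    "Race is Other and Specify Other Race is missing.",
    [("DM.RACE", "Othr"), ("DM.RACEOTHER", "")],
)
```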

Summary

In this second part of the three-part series on Automated User Acceptance Testing, we briefly covered the formatting of the tests, how they are executed and how the system helps you ensure that tests stay in sync with the Edit Checks, Forms and other objects that they are supposed to be testing.

These features make the execution and maintenance of tests much easier and faster, but we are still left with the huge challenge of writing these kinds of tests for hundreds of Edit Checks. In the last part we'll cover how the TrialGrid system can automate that part, creating tests in seconds that would take a human hundreds of hours of effort.

Come back tomorrow. But if you want to see this system in action, don't forget our free webinar on January 10, 2019.

Registration at https://register.gotowebinar.com/register/2929700804630029324

Notes:

[1] Why "gherkin"? That is a story in itself, but in summary: "gherkin" is the format used by a software tool called "cucumber", which is called cucumber because passing tests are shown in green text, so the idea was to get everything to "look as green as a cuke". I know, hilarious.