Save 20-30% on Edit Check Builds

Andrew and I are on a mission to reduce the cost and effort of building Rave studies by 50%. It's an ambitious goal but nothing really worth doing is easy.

One of the most costly areas of study build is the writing and testing of Edit Checks. So let's take a look at Edit Checks and where the costs are.

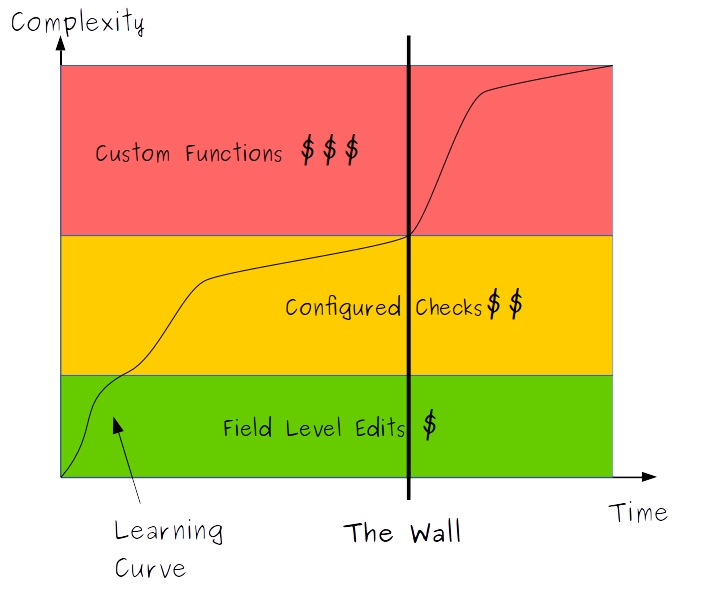

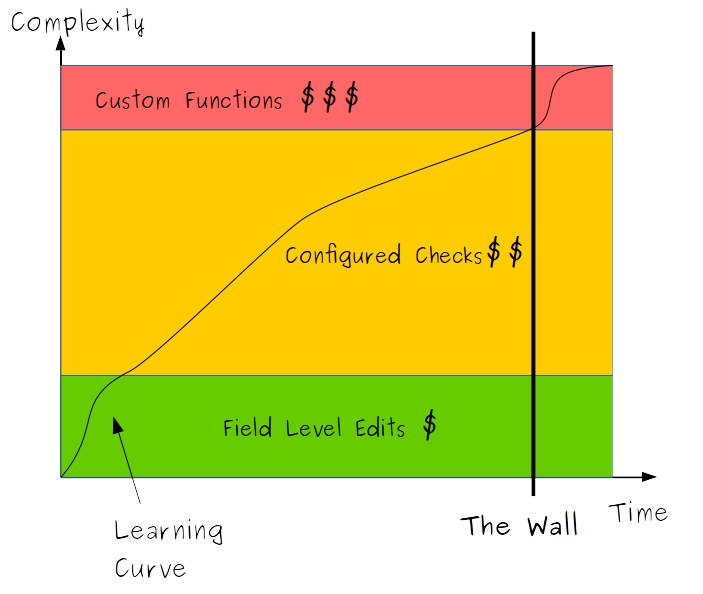

Three levels of Edit Check logic

In the previous post we looked at the three levels of edit check logic:

- Field Checks (Range, IsRequired, QueryFutureDate etc)

- Configured Checks (Rave Edit Checks)

- Custom Functions

Field Checks can be set up with a few clicks and some data entry for expected high and low ranges. They are extremely fast and easy to set up and require little or no testing since they are features of the validated Rave system. Field edit checks are so easy we're giving these a value of $1 for all the checks set on a field (Is Required, Simple Numeric Ranges, Cannot be a Future date etc). That doesn't mean they literally cost $1 to include in your study. Depending on how you build, your staffing costs, how luxurious your offices are and so on, your price will vary. $1 is just a good baseline figure to compare other costs against.

Configured Checks are written using Rave's Edit Check editor, which uses a postfix notation (1 1 + 2 isequalto). Rave Edit Checks are flexible and very functional, but every Edit Check that is written has to be specified, written and tested, making it more expensive to create than a simple Field Check. You also need a more skilled study builder to write a Configured Check. So let's say $10, on average, to create a Configured Edit Check. Again, $10 is not a literal cost, it's just a comparison.
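If postfix notation is new to you, a small sketch may help. The Python below evaluates the `1 1 + 2 isequalto` example with a stack: operands are pushed, and each operator pops its two arguments and pushes the result. This is only an illustration of how postfix reads, not Rave's actual evaluation engine; the function name `eval_postfix` and the two-entry operator table are assumptions made for the example.

```python
def eval_postfix(expression: str):
    """Evaluate a space-separated postfix expression using a stack."""
    # Illustrative operator table: just the two operators from the example.
    binary_ops = {
        "+": lambda a, b: a + b,
        "isequalto": lambda a, b: a == b,
    }
    stack = []
    for token in expression.split():
        if token in binary_ops:
            # Operators consume the two most recent values on the stack.
            right = stack.pop()
            left = stack.pop()
            stack.append(binary_ops[token](left, right))
        else:
            # Anything that is not an operator is treated as a number.
            stack.append(float(token))
    if len(stack) != 1:
        raise ValueError("malformed postfix expression")
    return stack[0]


print(eval_postfix("1 1 + 2 isequalto"))
```

Read left to right: `1 1 +` leaves 2 on the stack, the next `2` is pushed, and `isequalto` compares the two.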

Lastly we have Custom Functions. These are written in C#, VB.NET or SQL and require some level of true programming expertise. Custom Functions are the fallback, the special tool in the toolbox for the truly complex situations. Besides the difficulty of hiring (and keeping) good programmers in the current technical market, Custom Functions have to be specified, reviewed for coding standards and performance impact, and tested. We'll say, conservatively, $50 for the development of a Custom Function. Once again, $50 is just a cost relative to the $1 Field Check, since the average Custom Function is at least 50x more complex than a Field Check.

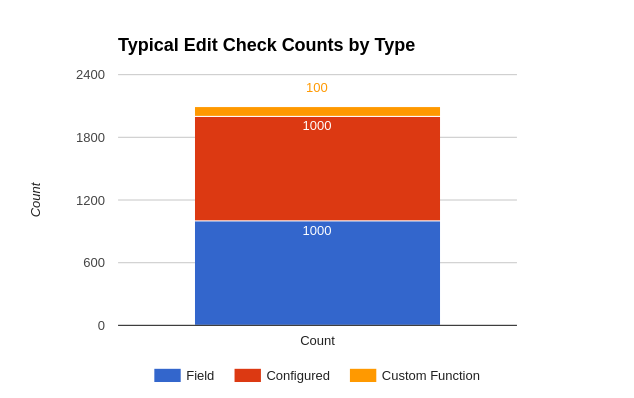

Study Averages

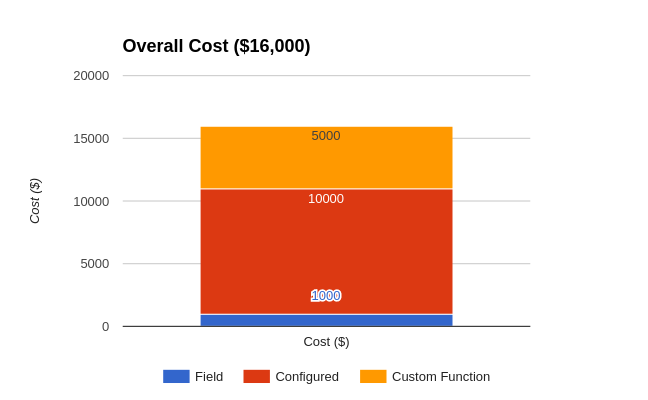

There is no such thing as an average study: the size and complexity of a study depend on its Phase, Therapeutic Area and many other variables. But we have seen a lot of trials over the years, so we'll illustrate costs with what we think is fairly typical: a study with around 1,000 data entry fields, 1,000 Configured Edit Checks and 100 Custom Functions.

Given those numbers we can draw a graph that shows how the Edit Checks in our study stack up.

A graph of the costs is also enlightening:

The bulk of the cost is in the Configured Edit Checks, but those 100 Custom Functions account for roughly 30% of the total.
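For anyone who wants to plug in their own study, the arithmetic behind these figures can be sketched in a few lines of Python. The unit costs ($1, $10, $50) and the counts (1,000 fields, 1,000 Configured Checks, 100 Custom Functions) come from the text above; the dictionary and function names are just illustrative.

```python
# Relative cost per item, as assigned earlier in this post.
RELATIVE_COST = {"field": 1, "configured": 10, "custom_function": 50}

# The "fairly typical" example study.
STUDY_COUNTS = {"field": 1000, "configured": 1000, "custom_function": 100}


def cost_breakdown(counts, unit_cost):
    """Return the relative cost of each kind of check."""
    return {kind: counts[kind] * unit_cost[kind] for kind in counts}


baseline = cost_breakdown(STUDY_COUNTS, RELATIVE_COST)
total = sum(baseline.values())

for kind, cost in baseline.items():
    print(f"{kind}: ${cost:,} ({cost / total:.0%} of total)")
print(f"total: ${total:,}")
```

With these inputs the total comes to $16,000: $1,000 for Field Checks, $10,000 for Configured Checks and $5,000 for Custom Functions, which is 31.25% of the total.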

How to reduce the cost?

Field Edits are so easy that there is little that could be done to make creating them more efficient, but there is scope for improvement in Configured Edits and Custom Functions. How could we reduce the costs of those?

At TrialGrid we're attacking this challenge with CQL, the Clinical Query Language. CQL is an infix format for Rave Configured Edit Checks which is easy and fast to write and which has built-in testing facilities.

An Edit Check with CQL (infix) logic like:

1 | |

would be translated into a Rave Edit Check (postfix) logic like:

1 2 3 4 5 6 7 8 9 10 11 | |

CQL also includes a set of built-in functions that automatically generate Custom Functions for you.

For example, we have been asked for an Edit Check that determines if a text field contains non-ASCII characters. Using it in a CQL expression is easy:

1 | |

The TrialGrid application takes care of generating the Custom Function. You'll still need some bespoke Custom Functions but fewer and fewer as time goes on and we build more into CQL.
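To give a feel for the kind of logic such a check encapsulates, here is a rough Python sketch of a non-ASCII test. It is not the Custom Function that TrialGrid generates (Rave Custom Functions are written in C#, VB.NET or SQL), and the name `contains_non_ascii` is hypothetical; it simply treats any character with a code point above 127 as non-ASCII.

```python
def contains_non_ascii(text: str) -> bool:
    """Return True if any character falls outside the 7-bit ASCII range."""
    return any(ord(ch) > 127 for ch in text)


# Hypothetical field values, for illustration only.
for value in ["Aspirin 100 mg", "Müller", ""]:
    print(repr(value), contains_non_ascii(value))
```

The logic itself is small. The cost of a hand-written Custom Function comes mostly from specifying, reviewing and testing it, which is why generating it automatically is attractive.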

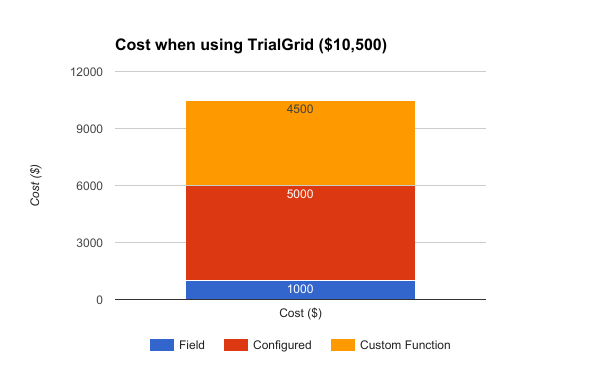

We (conservatively) estimate that CQL can save a Clinical Programmer or Data Manager 50% of the effort of writing Configured Edit Checks, and that generated Custom Functions will reduce the number that have to be hand-written by at least 10%. When we plug these numbers into the costings for our example study, the price drops from $16,000 to $10,500, a saving of 34%.
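The saving can be checked with a short Python sketch. The unit costs, study counts and the two estimates (50% less effort on Configured Checks, 10% fewer hand-written Custom Functions) are taken from this post; the function and parameter names are illustrative, so swap in your own figures to see how the result moves.

```python
def study_cost(fields, configured, custom_functions,
               configured_effort=1.0, custom_function_share=1.0):
    """Relative study cost using the $1 / $10 / $50 figures from this post.

    configured_effort: fraction of Configured Check effort still required.
    custom_function_share: fraction of Custom Functions still hand-written.
    """
    return (fields * 1
            + configured * 10 * configured_effort
            + custom_functions * custom_function_share * 50)


baseline = study_cost(1000, 1000, 100)
with_cql = study_cost(1000, 1000, 100,
                      configured_effort=0.5,      # 50% effort saved
                      custom_function_share=0.9)  # 10% fewer hand-written
saving = (baseline - with_cql) / baseline

print(f"baseline: ${baseline:,.0f}")
print(f"with CQL: ${with_cql:,.0f}")
print(f"saving:   {saving:.0%}")
```

The Configured Checks fall from $10,000 to $5,000 and the Custom Functions from $5,000 to $4,500, so the $5,500 saving on a $16,000 baseline works out to just over 34%.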

Who wouldn't want that?