Continuous Validation (2019 Edition)

Back in 2017 I wrote a blog post outlining our Continuous Validation procedure. This week Hugh O'Neill wrote an article on the PHARMASEAL Blog describing their process of Continuous Validation and it sparked some conversation on LinkedIn.

While our process remains much the same, it has been refined and tested through audits. I hope an update on how it works will help other organizations adopt a Continuous Validation process, or at least accelerate their existing one.

Our practice is a refinement of an approach used by our former colleagues at Medidata Solutions. We didn't invent it and we are still open to learning new and better ways to perform validation.

Who are we?

To understand our process it helps to understand what kind of company we are.

- We have a small, very experienced team. We need everyone on the team to be able to do everything - coding, database admin, code review, testing and so on. We don't have silos of responsibility.

- We are a geographically distributed team. We don't have a central office or any company owned physical assets. The company doesn't own a filing cabinet - it's all in the cloud with qualified SaaS providers. Everything has to be accessible via the web - our product, our systems, our procedures and policies and our validation documentation.

- We don't handle Subject/Patient Clinical Data. That makes us inherently less risky than, say, an EDC system.

- We know we are not world-leading experts in Validation. None of us have an auditing background. Our interactions with Auditors are an opportunity to learn and improve. Here we describe what is working for us.

If you don't have a Quality Management System, you're Testing not Validating

I've said this before but it's worth repeating. Validated software is software that has been developed within the guiding framework of a Quality Management System (QMS). The QMS describes what you do, why and how you do it. An Auditor will compare your documented procedures to evidence that you followed the procedures.

Developing a QMS is beyond the scope of a blog post, but write to me if you're interested in that topic.

Continuous Integration

ThoughtWorks defines Continuous Integration as:

Continuous Integration (CI) is a development practice that requires developers to integrate code into a shared repository several times a day. Each check-in is then verified by an automated build, allowing teams to detect problems early.

"Automated Build" means that when changes are received from a developer they are automatically compiled into a working system. If that process succeeds, further automated tests are run on the system to see that it works as expected. These steps are usually organized as a pipeline with each step performing a test and then passing onto the next step in the pipeline if the tests pass.

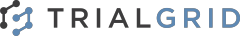

Here we see part of the TrialGrid CI pipeline showing 3 stages, some with multiple parallel tasks.

Continuous Delivery

Continuous Delivery expands on this idea with the end of the pipeline being a package that can be deployed to customers at the push of a button.

Commit Code > Compile > Run Tests > Assemble Deployable Package
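The flow above can be sketched in a few lines of Python. This is only an illustration of the idea that each stage must pass before the next one runs; the `run_pipeline` function and the stage names are made up for this example and are not how GitLab or our own pipeline is implemented.

```python
from typing import Callable, List, Tuple

# A stage is a (name, step) pair; the step returns True when it succeeds.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for name, step in stages:
        if not step():
            print(f"Stage failed: {name}")
            break
        completed.append(name)
    return completed


# Hypothetical stages standing in for real build and test commands.
example_stages = [
    ("Compile", lambda: True),
    ("Run Tests", lambda: True),
    ("Assemble Deployable Package", lambda: True),
]
```

Calling `run_pipeline(example_stages)` returns all three stage names; if "Run Tests" returned `False`, the package would never be assembled.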

Organizing a development team so that it can use Continuous Delivery is a big undertaking. It means automating every step in the process so that the output deployment package contains everything required. This is easier if you don't have hand-offs between groups. If your process involves programmers writing code and then technical writers authoring the help material, this will be hard to coordinate. It is much better if the help material is written alongside the code and they are dropped into the pipeline together.

Continuous Validation

Continuous Validation takes the idea of Continuous Delivery one step further and adds an additional output to the pipeline - a Validation Package that bundles up all the evidence that the software development practices which are mandated in the Quality Management System have been performed.

We want to do more than just drop a zip file on an auditor. By collecting evidence as data (just as Hugh O'Neill describes in his blog post) we can create a hyperlinked website, a Validation Portal, which is easy to navigate and contains all the evidence required to perform a virtual audit of the software.

Evidence

Auditors have a simple rule:

If there is no evidence that something happened, it didn't happen.

Some of this evidence is generated as part of the pipeline, the output of tests for example, but evidence of activities such as code review needs to be fed into the pipeline as data.

When we do something mandated by our Quality Management System we want to record that we did it. Ideally, recording this evidence should not put a greater burden on us; it should be a by-product of the action.

Requirements and Traceability

In validated software everything starts with a requirement. When a user points to a widget on a screen and says "Why is this here?" you should be able to direct them to a written requirement that explains its function.

This will lead to the question "How do I know what you have implemented is functionally correct?" and you should be able to guide them to a plan for testing that feature and from there to evidence that the plan was executed.

Requirement > Test Plan > Evidence of Testing

Maintaining this traceability matrix is a challenge. It is also a core element of the Validation Package that auditors will inspect, so it too has to be captured.
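One way to picture the Requirement > Test Plan > Evidence chain is as a small data structure. The sketch below is an assumption-laden illustration, not our actual package generator: `TraceRow`, `build_matrix` and `gaps` are hypothetical names, and the identifiers passed in are placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TraceRow:
    """One row of a traceability matrix (hypothetical structure)."""
    requirement: str
    test_plans: List[str] = field(default_factory=list)
    evidence: List[str] = field(default_factory=list)

    @property
    def complete(self) -> bool:
        # A requirement is traced only if it has a plan AND evidence.
        return bool(self.test_plans) and bool(self.evidence)


def build_matrix(
    requirements: List[str],
    plans_by_requirement: Dict[str, List[str]],
    evidence_by_plan: Dict[str, List[str]],
) -> List[TraceRow]:
    """Join requirements to their test plans and the plans to evidence."""
    rows = []
    for req in requirements:
        plans = plans_by_requirement.get(req, [])
        evidence = [e for p in plans for e in evidence_by_plan.get(p, [])]
        rows.append(TraceRow(req, plans, evidence))
    return rows


def gaps(rows: List[TraceRow]) -> List[str]:
    """Requirements an auditor could not trace through to evidence."""
    return [row.requirement for row in rows if not row.complete]
```

The useful part is `gaps`: any requirement it returns is one where the chain is broken, which is exactly what an auditor would ask about.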

Tools

To organize our development efforts we use GitLab. GitLab is an open-source product which you can install yourself or use as a paid hosted service. It combines many of the tools required for the software development process:

- Source code repository

- Bug tracker / issues list

- Continuous Integration pipeline management

And a lot more besides. GitHub and BitBucket are similar products.

We use git for managing changes to source code. All you really need to know about git is that it:

- Allows you to pull down a complete copy of the "master" copy of your source code.

- Tracks every change you make

- Packages up a set of changes into a "commit" with a comment on why these changes were made

- Manages merges of your committed changes into the master copy

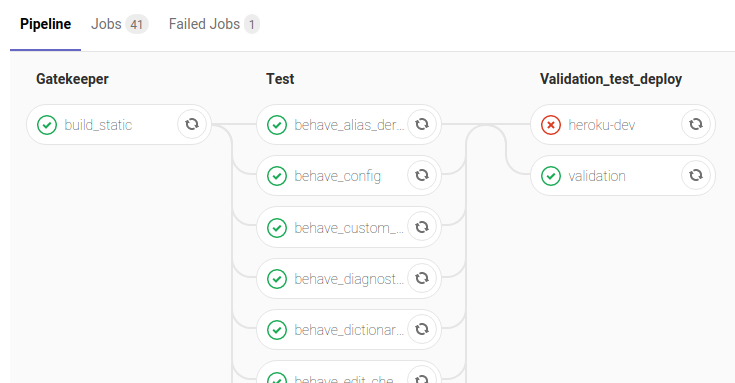

GitLab provides a way to coordinate this activity and collect data on it. When I want to merge changes into the master copy I create a record in GitLab called a "Merge Request". Here's a screenshot:

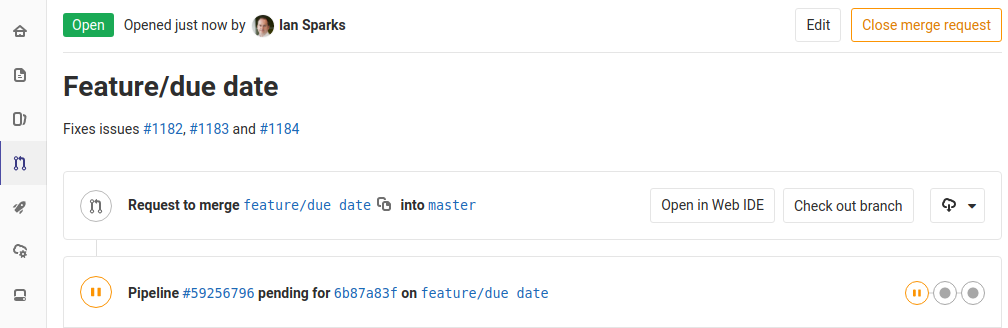

Notice the #1182, #1183 and #1184 - these are references to Issues stored in the GitLab issue tracker. We also put these references into our commit messages:

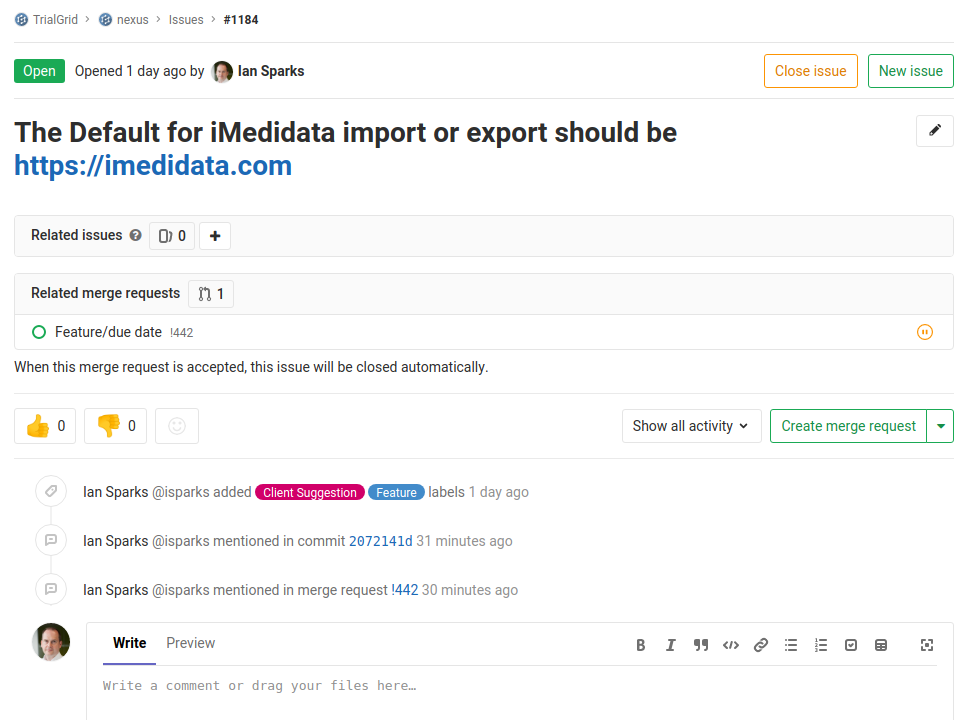

Here's the related Issue for #1184:

We use GitLab issues both for bug reports and for features. You can see that this one has been labelled as a Client Suggestion and as a Feature. You can also see that GitLab is finding references to this Issue in the merge request and in the commits which are part of that merge request.

Traceability from tests to requirements

Using Issue reference numbers gives us traceability between the requirement for a feature (the Issue) and the changes to the code that addressed that feature. This is cool but probably too much information for an Auditor. They want to see a link between this requirement, a test plan and testing evidence.

We use human-readable (and computer executable) tests to exercise UI features and we tag them with @issue to create a link between a test and an issue.

Here's a test definition (a plan) for issue #1184:


When this test is run it will click through the TrialGrid application just as a human would and take screenshots as evidence as it goes.
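The @issue tags can be collected mechanically. Here is a minimal sketch, assuming a tag is written as `@issue:1184` somewhere in the test definition text; that exact syntax, the file names and the function names are assumptions for illustration only.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set

# Assumed tag syntax: "@issue" followed by an optional separator and a number.
ISSUE_TAG = re.compile(r"@issue[:=_-]?(\d+)")


def issues_in_test(text: str) -> Set[int]:
    """Return every issue number tagged in one test definition."""
    return {int(number) for number in ISSUE_TAG.findall(text)}


def index_tests_by_issue(tests: Dict[str, str]) -> Dict[int, List[str]]:
    """Map each issue number to the names of the tests that exercise it.

    `tests` maps a test name (for example a file name) to its text.
    """
    index = defaultdict(list)
    for name in sorted(tests):
        for issue in sorted(issues_in_test(tests[name])):
            index[issue].append(name)
    return dict(index)
```

The resulting index answers the auditor's question in one lookup: given an issue number, which test plans cover it?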

The programs that generate our validation package extract issue references from the Merge Requests and Commits and match them with the test definitions that exercise those issues and their related test evidence.
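As a rough sketch of that matching step (not the actual program), the code below pulls `#1184`-style references out of commit or Merge Request text and looks each one up in a mapping from issue number to test names. The function names, the sample messages in the usage note and the shape of the mapping are all assumptions.

```python
import re
from typing import Dict, Iterable, List, Set

# "#" followed by digits, not preceded by a word character (so "abc#12" is ignored).
ISSUE_REF = re.compile(r"(?<!\w)#(\d+)\b")


def issue_refs(messages: Iterable[str]) -> Set[int]:
    """Collect every issue number referenced in the given messages."""
    refs = set()
    for message in messages:
        refs.update(int(number) for number in ISSUE_REF.findall(message))
    return refs


def link_issues_to_tests(
    messages: Iterable[str],
    tests_by_issue: Dict[int, List[str]],
) -> Dict[int, List[str]]:
    """For each referenced issue, list the tests that exercise it.

    An empty list marks an issue that was changed but has no tagged test.
    """
    return {
        issue: tests_by_issue.get(issue, [])
        for issue in sorted(issue_refs(messages))
    }
```

For example, with the made-up messages `["Fix labels #1182, #1183", "Closes #1184"]` and a mapping that only knows about 1184, the result flags 1182 and 1183 as having no linked test.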

Here's a short sequence that shows the linkage between tests / evidence / issues and merge requests in the validation package.

Using this method it is easy to maintain traceability and the hyperlinked navigation has been well received by auditors used to dealing with requirements documents written in Word, traceability matrices managed in Excel spreadsheets and evidence in ring-binders.

Code Review

At the end of the previous video I scroll through the commits and their comments which formed part of a Merge Request.

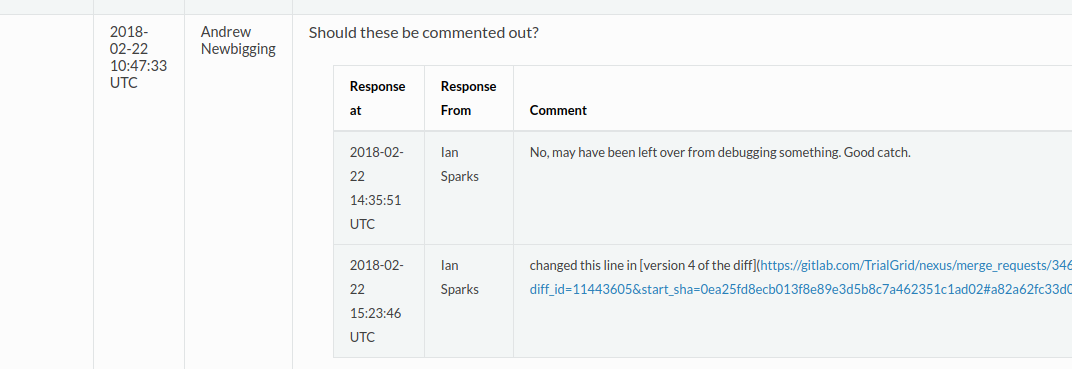

An example comment:

When a Merge Request is created it displays all the changes made to the code and provides an opportunity to review and make comments. A Merge Request is blocked and code cannot be merged into the master copy until all comments have been resolved. This is our opportunity to perform code review. All comments and responses, including changes to the code as a result of comments, are captured by GitLab.

We use GitLab APIs to pull out the comment history on Merge Requests and include it in the validation package as evidence of code review and of acceptance of the changes made to the code.
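To give a flavour of what this can look like, here is a minimal sketch using only the Python standard library and GitLab's REST endpoint for Merge Request notes. It is not our production code: the function names and the shape of the evidence records are assumptions, only the first page of results is fetched, and the field names (`system`, `author`, `body`, `created_at`, `resolved`) should be checked against the GitLab API documentation for your version.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict, List


def notes_url(base_url: str, project_id: Any, mr_iid: int) -> str:
    """Build the notes URL; a project path such as group/project is URL-encoded."""
    project = urllib.parse.quote(str(project_id), safe="")
    return (
        f"{base_url}/api/v4/projects/{project}"
        f"/merge_requests/{mr_iid}/notes?per_page=100"
    )


def fetch_notes(base_url: str, project_id: Any, mr_iid: int, token: str) -> List[Dict]:
    """Fetch the first page of notes for a Merge Request (needs network access)."""
    request = urllib.request.Request(
        notes_url(base_url, project_id, mr_iid),
        headers={"PRIVATE-TOKEN": token},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def review_evidence(notes: List[Dict]) -> List[Dict]:
    """Keep human comments only and reduce them to simple evidence records."""
    evidence = []
    for note in notes:
        if note.get("system"):
            continue  # skip automatic notes such as "added 2 commits"
        evidence.append({
            "author": note.get("author", {}).get("username"),
            "created_at": note.get("created_at"),
            "body": note.get("body"),
            "resolved": note.get("resolved"),
        })
    # ISO 8601 timestamps in the same timezone format sort chronologically as text.
    return sorted(evidence, key=lambda record: record["created_at"] or "")
```

Keeping `review_evidence` separate from the network call means the transformation can be tested against saved JSON without touching GitLab.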

Generating Validation Packages

At TrialGrid we practice Continuous Delivery. That doesn't mean that if a code change passes our pipeline it is deployed directly to the Production environment. We decide when to deploy to production and trigger the automated deployment at times agreed with our customers.

We do deploy immediately to our Beta environment when the CI pipeline succeeds and a Merge Request is approved.

In addition, every Merge Request that passes the pipeline is deployed to our Development environment where it can be smoke tested by the reviewer before they approve the contents of the Merge Request.

We generate a validation package for every run of the pipeline. We only keep and sign-off on packages that relate to Production deployment but we are constantly generating these packages.

In effect, we are making the validation package part of the software development process. If a developer changes code in such a way that it affects the generation of the validation package then the pipeline will fail. The software may be fine, all tests may pass but if the validation package isn't generated correctly the whole pipeline fails and the developer must fix it.
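A pipeline step that enforces this can be very small. The sketch below assumes the validation package is a directory and that a fixed list of files must exist and be non-empty; the file names in `REQUIRED_ARTIFACTS` and the function names are hypothetical, chosen only to show the pattern of returning a non-zero exit code so that the CI job fails.

```python
from pathlib import Path
from typing import List, Sequence

# Hypothetical artifact names; a real package would define its own list.
REQUIRED_ARTIFACTS = ("index.html", "traceability.json", "code_review.json")


def missing_artifacts(
    package_dir: str,
    required: Sequence[str] = REQUIRED_ARTIFACTS,
) -> List[str]:
    """Return required files that are absent or empty in the package."""
    root = Path(package_dir)
    missing = []
    for name in required:
        path = root / name
        if not path.is_file() or path.stat().st_size == 0:
            missing.append(name)
    return missing


def main(argv: Sequence[str]) -> int:
    """Return 0 if the package is complete, otherwise a non-zero exit code."""
    if len(argv) != 2:
        print("usage: check_package.py PACKAGE_DIR")
        return 2
    missing = missing_artifacts(argv[1])
    if missing:
        print("Validation package incomplete, missing: " + ", ".join(missing))
        return 1
    print("Validation package complete")
    return 0
```

A CI job would end the script with `sys.exit(main(sys.argv))`; CI systems such as GitLab treat a non-zero exit code as a failed job, which is what stops the pipeline.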

For production releases we have a manual process of review for the validation package and sign-off via electronic signature. Our CI pipeline has around 40 steps, many running in parallel, and completes in about 60 minutes. The validation package it generates weighs in at 1.3GB with about 500MB of images. This gets deployed automatically to Amazon S3 as a password-protected resource ready for virtual audit.

Summary

I hope this glimpse into our Continuous Delivery / Continuous Validation approach is helpful. We are constantly refining this process and welcome new ideas.

In customer audits we have been able to demonstrate that we have our software development process under control and provide evidence that we are doing the things required by our Quality Management System.