Tracing Bug Fixes to the Code

One of the key things that auditors want to see in validation documents is traceability between features, tests of those features and the evidence that the tests were executed. Normally this is presented as a requirements specification, a set of test scripts which reference the specification and records that the test scripts were executed. For product management and for an auditor this provides assurance that you planned what you wanted to create, you developed acceptance criteria and you tested the results.

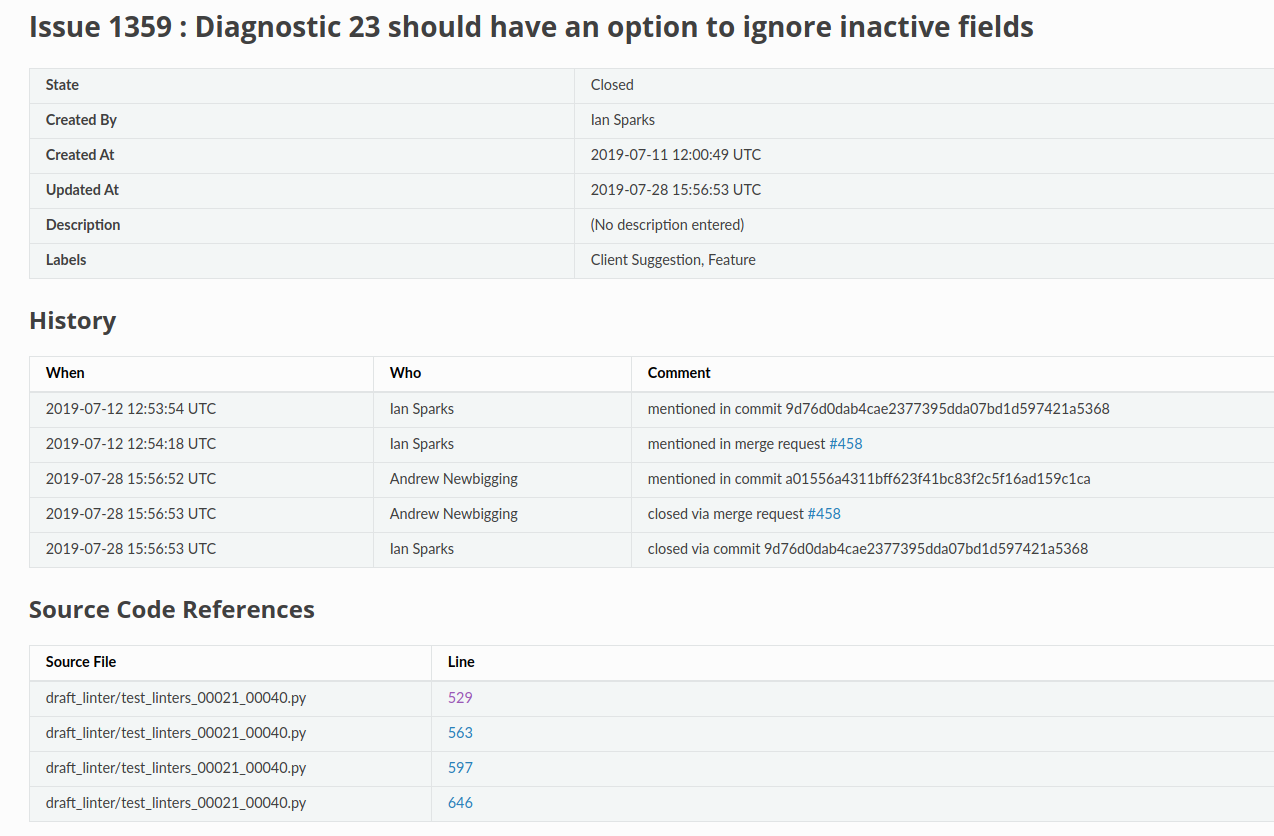

At TrialGrid we record our specifications as Issues in a GitLab repository and we reference the unique identifiers for these issues in our automated test scripts and in commit messages. I outlined this in our recent blog post. For this discussion the important thing to know is that for every Issue we have a summary page in our validation portal which pulls data from GitLab to display a description, history and links to requirements tests for that Issue.

What about traceability between bugs and bug-fixes?

We also use Issues to track bugs. Some bugs have a visual component, and for those we include tests that the bug is fixed as part of our automated requirement test scripts. These "Bug Issues" then have traceability from the Issue to the tests that show they are fixed, just like a "Requirement Issue".

However, not all bug fixes have a visual component, so we would end up with some Issue pages with no hyperlink traceability to any proof that the bug had been fixed and tested.

Is this a big deal? In my experience, organizations don't provide this kind of traceability. Bug fixes are treated separately to requirements and references to bugs fixed go in the release notes. There may be a unit test that proves the bug was fixed but maintaining a traceability matrix between unit tests and bugs fixed is more work than anyone is looking for.

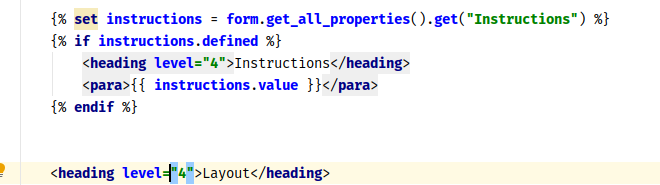

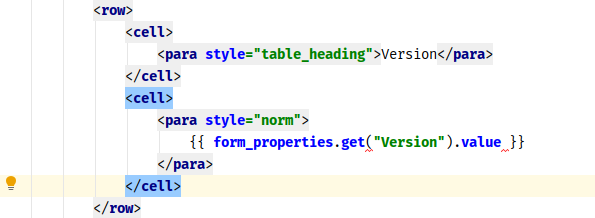

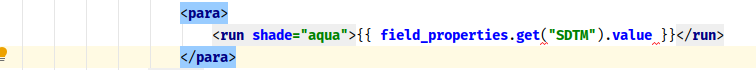

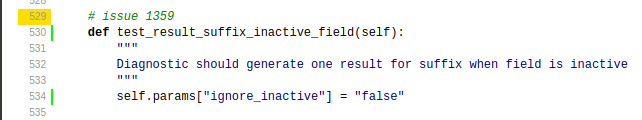

Still, it bothered us that our validation docs lacked this traceability. In our unit testing code we would already make reference to an issue in a comment.
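
As a rough illustration of the kind of test meant here, the sketch below shows a unit test carrying an issue comment. Everything in it is hypothetical: the function `normalise_label`, the test name and the behaviour being checked are invented for this example, and only the comment convention "issue 1359" comes from the post.

```python
import unittest


def normalise_label(label: str) -> str:
    """Hypothetical function under test: trim and collapse whitespace."""
    return " ".join(label.split())


class TestNormaliseLabel(unittest.TestCase):
    def test_trailing_whitespace_is_removed(self):
        # issue 1359
        # (assumed bug: labels with trailing spaces were not cleaned up)
        self.assertEqual(normalise_label("Visit Date  "), "Visit Date")
```

The comment is the only link between the test and the Issue, which is why it has to be machine-readable.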

With a comment like issue 1359 we were making reference to the issue. What we needed was a way to find these kinds of references and match them up with the pages in the validation docs with a hyperlink to the code.

Code is data

Our source code coverage analysis system already generates hyperlinked code listings which we include in our validation package. These listings allow us to create hyperlinks which will take the reader to an exact line of a particular file. What we needed was a way to read all our source code, identify the issue comments and make an association between an issue and the file and line where the issue is referenced.
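
One way to build that association is sketched below, using Python's standard `tokenize` module. This is a minimal sketch, not our production code: the function name `find_issue_references`, the regular expression and the sample file name are assumptions made for the example.

```python
import io
import re
import tokenize
from collections import defaultdict

# Assumed comment convention: "issue 1359" or "issue #1359", any case.
ISSUE_PATTERN = re.compile(r"\bissue\s*#?(\d+)", re.IGNORECASE)


def find_issue_references(source: str, filename: str) -> dict[int, list[tuple[str, int]]]:
    """Map each issue number to the (filename, line) pairs whose comments mention it."""
    references = defaultdict(list)
    tokens = tokenize.generate_tokens(io.StringIO(source).readline)
    for token in tokens:
        # Only look inside real comments, not string literals or identifiers.
        if token.type != tokenize.COMMENT:
            continue
        for match in ISSUE_PATTERN.finditer(token.string):
            # token.start is (row, column); rows are 1-based line numbers.
            references[int(match.group(1))].append((filename, token.start[0]))
    return dict(references)


# Hypothetical sample source, standing in for a real test module.
sample = '''def test_label():
    # issue 1359
    assert "a" == "a"  # see also issue #42
'''
refs = find_issue_references(sample, "tests/test_labels.py")
```

Tokenizing rather than searching the raw text means a string literal that happens to contain "issue 12" is not mistaken for a reference, and each match comes with the exact line number needed for the hyperlink.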

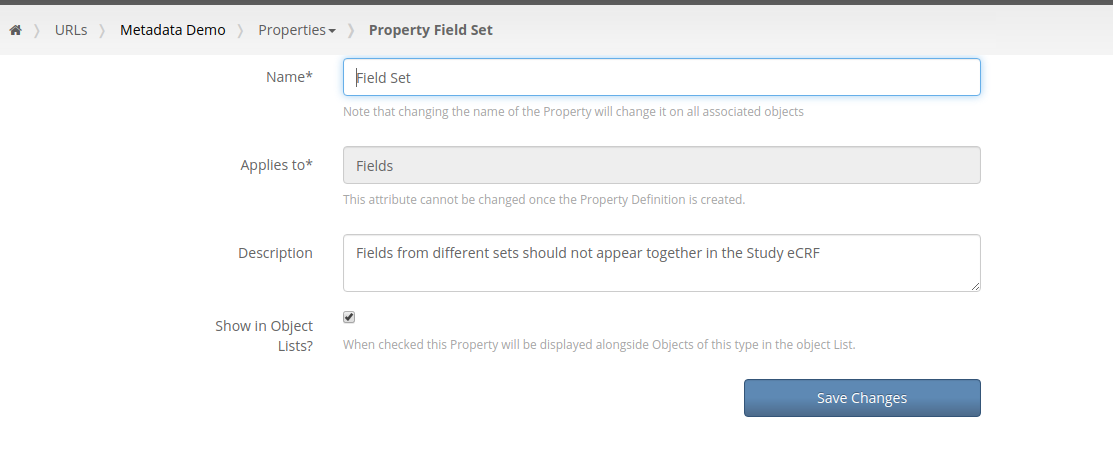

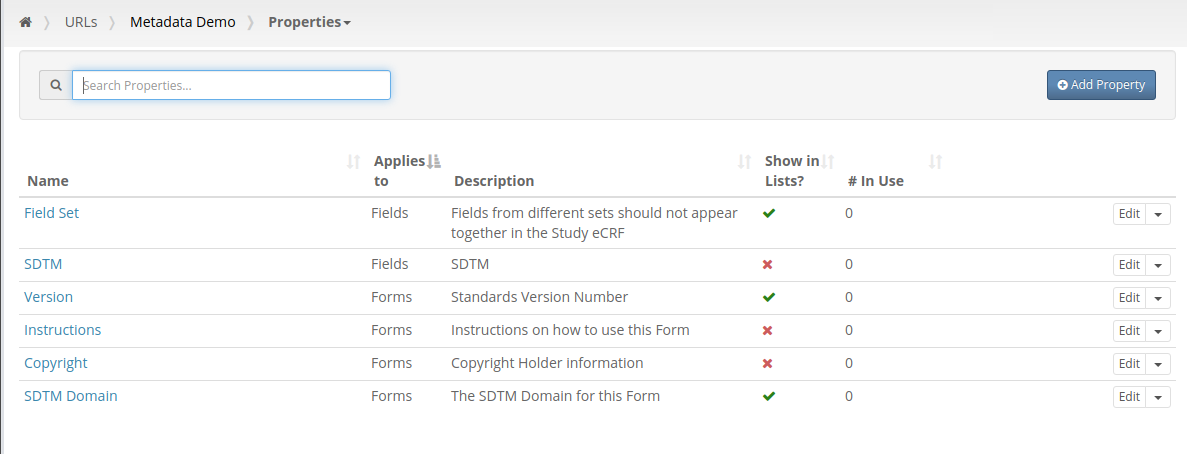

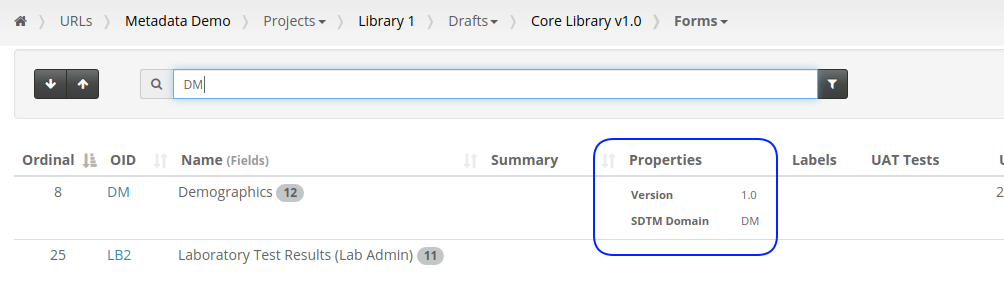

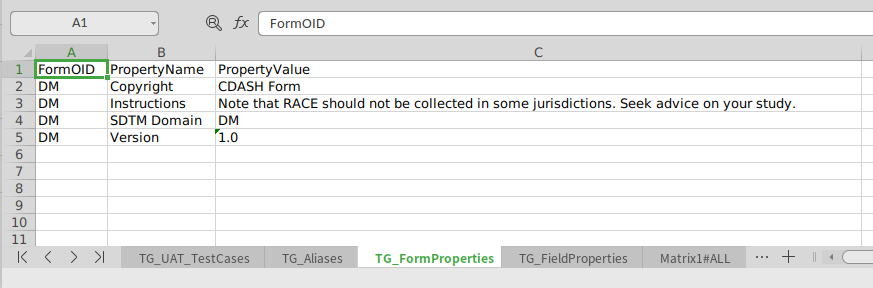

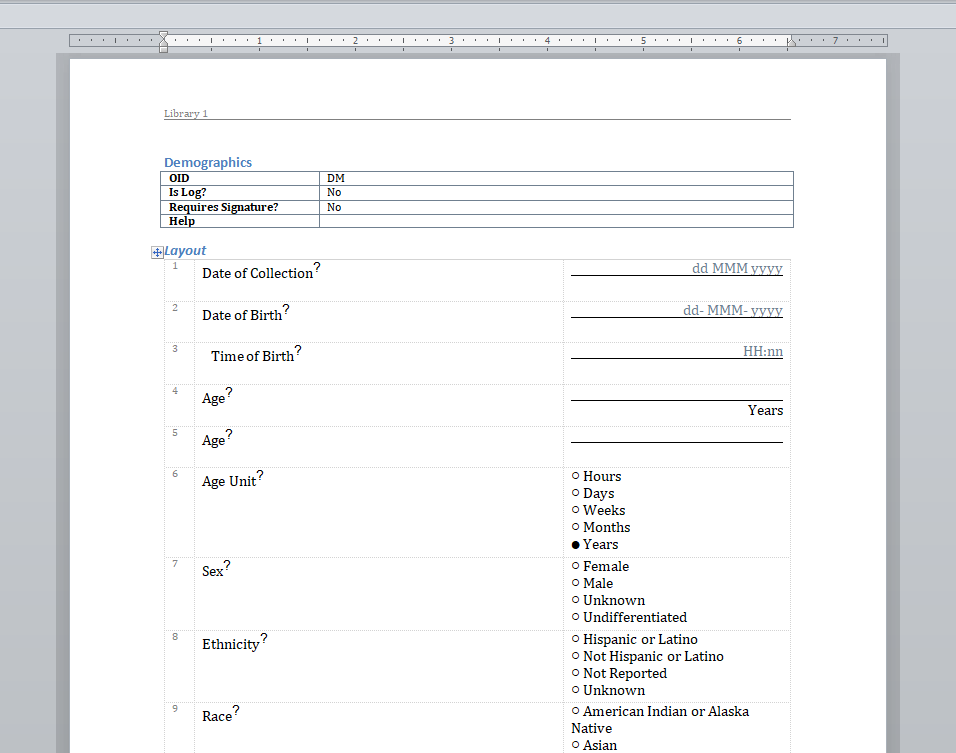

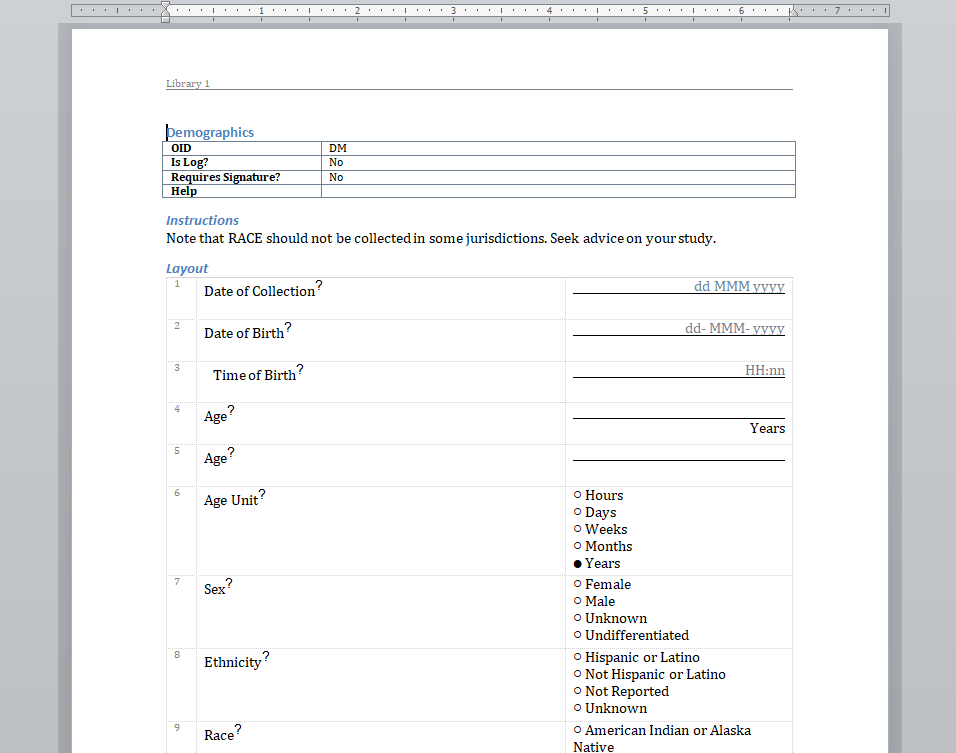

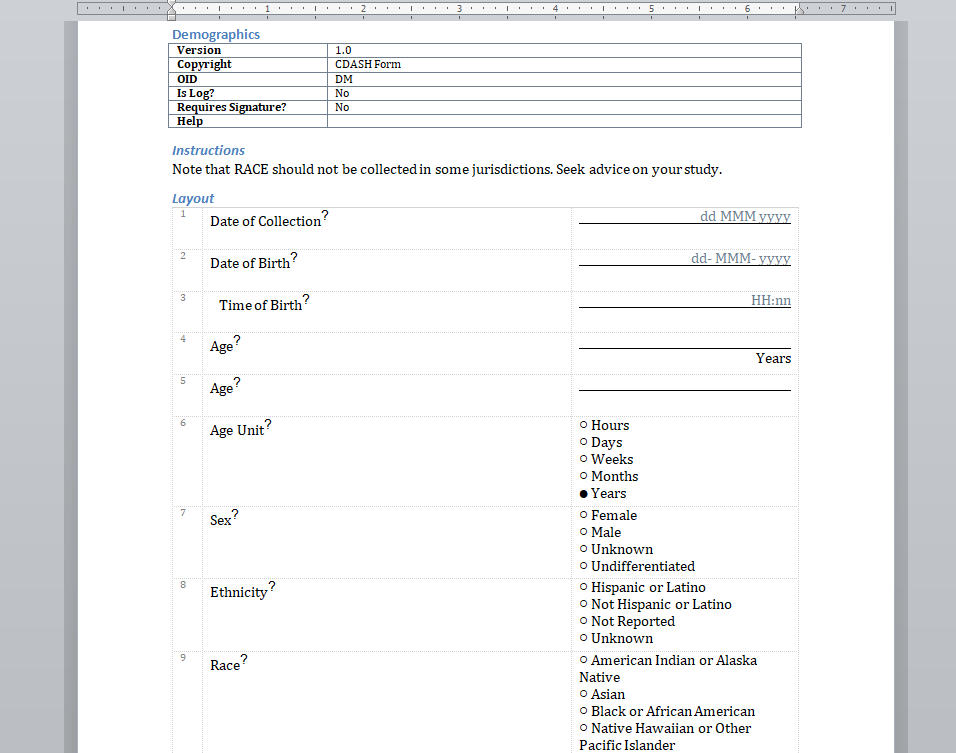

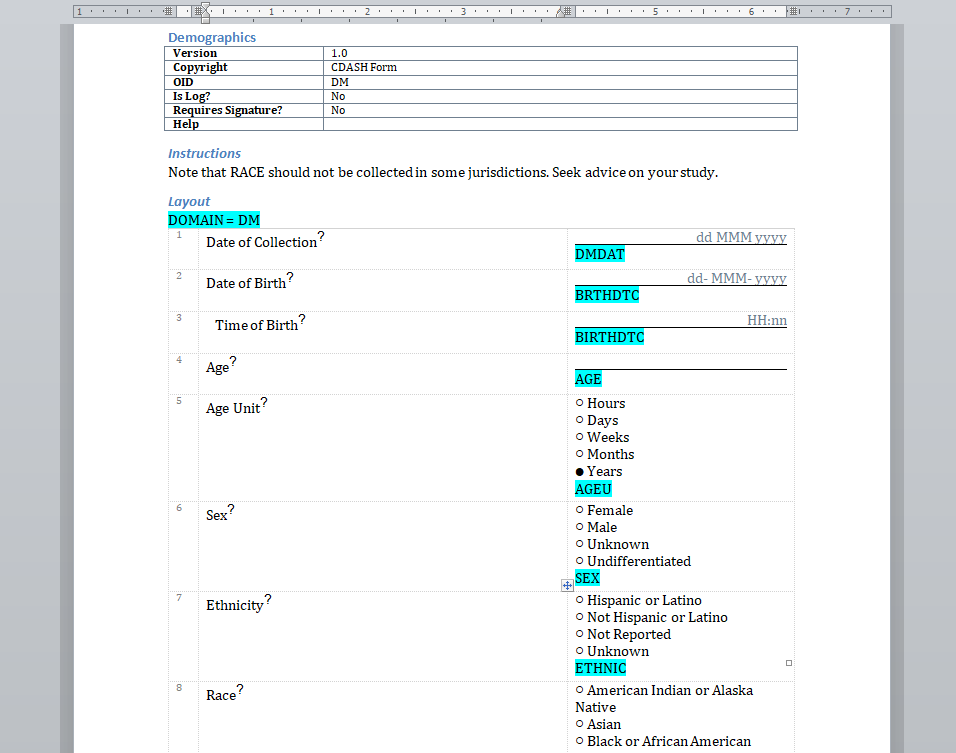

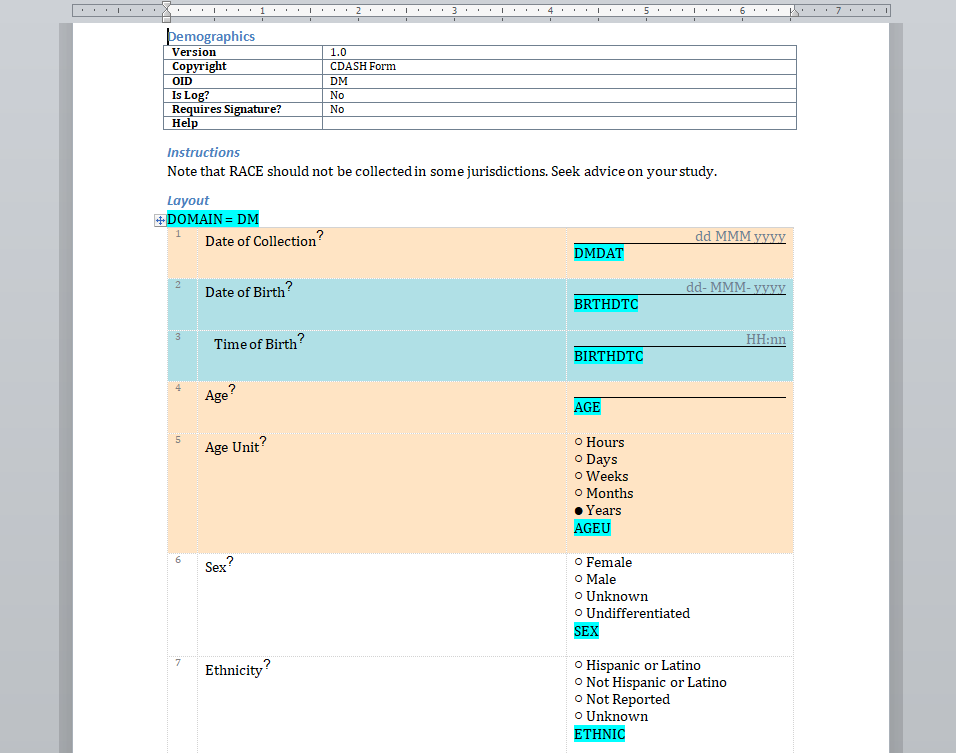

Happily for us, Python has good support for analysing source code, so we were able to get this working in just a few hours. The end result is hyperlinks from an issue page:
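
To give a feel for the last step, here is a hedged sketch of turning issue references into links for an issue page. The URL scheme (one HTML page per source file with an `#L<line>` anchor), the `listing_root` parameter and the function name `render_issue_links` are all assumptions for illustration; the real layout of our code listings may differ.

```python
from html import escape


def render_issue_links(issue, locations, listing_root="coverage"):
    """Render an HTML list of links to the lines that reference an issue."""
    items = []
    for filename, line in sorted(locations):
        # Hypothetical scheme: tests/test_labels.py -> tests_test_labels_py.html
        page = filename.replace("/", "_").replace(".", "_") + ".html"
        href = f"{listing_root}/{page}#L{line}"
        items.append(
            f'<li><a href="{escape(href)}">{escape(filename)}, line {line}</a></li>'
        )
    heading = f"<h2>Code references for issue {issue}</h2>"
    return heading + "\n<ul>\n" + "\n".join(items) + "\n</ul>"


# Hypothetical reference data: issue 1359 mentioned on line 2 of one test file.
html_fragment = render_issue_links(1359, [("tests/test_labels.py", 2)])
```

The fragment can then be dropped into the Issue's summary page alongside the description and history.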

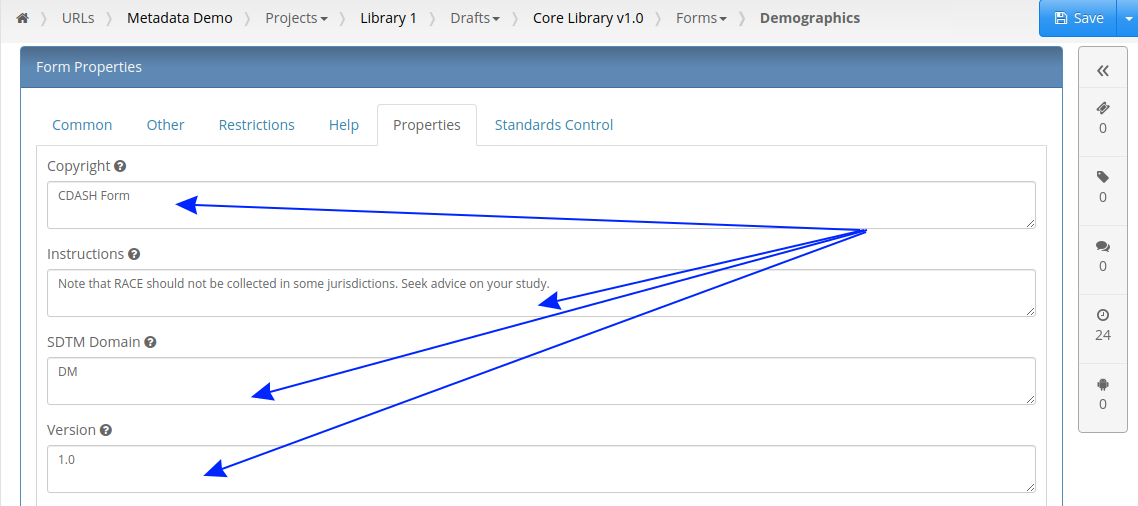

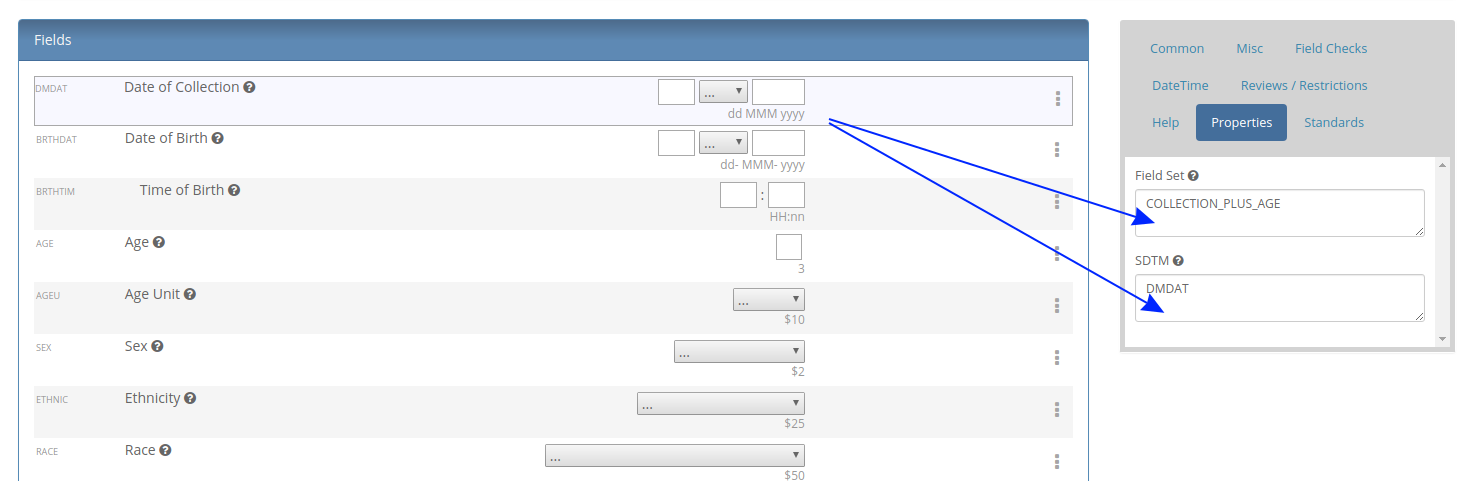

to the source code that exercises the issue:

Summary

We don't expect that auditors will want to review the source code of unit tests, but we think that providing traceability to bug fixes is useful and meets the needs of auditors who want to see evidence that a fix was tested.

We're not yet done with improving traceability in our validation package. More on our ongoing efforts in a future blog post.